Unlike with previous versions of DirectX, the differences between the new DirectX and previous generations are obvious enough that they can be explained in charts (and maybe someone with some visual design skill can do this).

This article is an extreme oversimplification. If someone wants to send me a chart to put in this article, I’ll update.

Your CPU and your GPU

Since the start of the PC, we have had the CPU and the GPU (or at least, the “video card”).

Up until DirectX 9, the CPU, being 1 core in those days, would talk to the GPU through the “main” thread.

DirectX 10 improved things a bit by allowing multiple cores to send jobs to the GPU. This was nice but the pipeline to the GPU was still serialized. Thus, you still ended up with 1 CPU core talking to 1 GPU core.

It’s not about getting close to the hardware

Every time I hear someone say “but X allows you to get close to the hardware” I want to shake them. None of this has to do with getting close to the hardware. It’s all about the cores. Getting “closer” to the hardware is relatively meaningless at this point. It’s almost as bad as those people who think we should be injecting assembly language into our source code. We’re way beyond that.

It’s all about the cores

Last fall, Nvidia released the GeForce GTX 970. It has 5.2 BILLION transistors on it. It already supports DirectX 12. Right now. It has thousands of cores in it. And with DirectX 11, I can talk to exactly 1 of them at a time.

Meanwhile, your PC might have 4, 8 or more CPU cores on it. And exactly 1 of them at a time can talk to the GPU.

Let’s take a pause here. I want you to think about that for a moment. Think about how limiting that is. Think about how limiting that has been for game developers. How long has your computer been multi-core?

But DirectX 12? In theory, all your cores can talk to the GPU simultaneously. Mantle already does this and the results are spectacular. In fact, most benchmarks that have been talked about have been understated because they seem unbelievable. I’ve been part of (non-NDA) meetings where we’ve discussed having to low-ball performance gains to be “only” 40%. The reality is that the real-world, non-benchmark results I’ve seen from Mantle (and presumably DirectX 12 when it’s ready) are far beyond this. The reasons are obvious.

To summarize:

DirectX 11: Your CPU communicates to the GPU 1 core to 1 core at a time. It is still a big boost over DirectX 9 where only 1 dedicated thread was allowed to talk to the GPU but it’s still only scratching the surface.

DirectX 12: Every core can talk to the GPU at the same time and, depending on the driver, I could theoretically start taking control and talking to all those cores.

That’s basically the difference. Oversimplified to be sure but it’s why everyone is so excited about this.
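To make that summary concrete, here is a toy model in Python. It is not real DirectX code: the function names (`record_commands`, `submit_dx11_style`, `submit_dx12_style`) and the idea of a "command list" as a plain Python list are made up for illustration, and Python threads will not show the real speedup. It only shows the shape of the change: one thread recording everything versus each core recording its own share at the same time.

```python
from concurrent.futures import ThreadPoolExecutor


def record_commands(objects):
    """Hypothetical stand-in for one core turning its objects into GPU commands."""
    return [f"draw({name})" for name in objects]


def submit_dx11_style(objects):
    # One thread records every command, no matter how many cores exist.
    return [record_commands(objects)]


def submit_dx12_style(objects, cores):
    # Split the objects so each core gets its own share...
    shares = [objects[i::cores] for i in range(cores)]
    # ...and let every core record its own command list at the same time.
    with ThreadPoolExecutor(max_workers=cores) as pool:
        return list(pool.map(record_commands, shares))


if __name__ == "__main__":
    scene = [f"unit{i}" for i in range(8)]
    print(len(submit_dx11_style(scene)), "command list from 1 core")
    print(len(submit_dx12_style(scene, 4)), "command lists from 4 cores")
```

The same draw commands come out either way. What changes is how many cores got to help produce them.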

The GPU wars will really take off as each vendor will now be able to come up with some amazing tools to offload work onto GPUs.

Not just about games

Cloud computing is, ironically, going to be the biggest beneficiary of DirectX 12. That sounds unintuitive but the fact is, there’s nothing stopping a DirectX 12 enabled machine from fully running VMs on these video cards. Ask your IT manager which they’d rather do: pop in a new video card or replace the whole box? Right now, this isn’t doable because cloud services typically don’t even have video cards in them (I’m looking at you, Azure. I can’t use you for offloading Metamaps!).

It’s not magic

DirectX 12 won’t make your PC or Xbox One magically faster.

First off, the developer has to write their game so that they’re interacting with the GPU through multiple cores simultaneously. Most games, even today, are still written so that only 1 core is dedicated to interacting with the GPU.

Second, this only benefits you if your game is CPU bound. Most games are. In fact, I’m not sure I’ve ever seen a modern Nvidia card get GPU bound (if anyone can think of an example, please leave it in the comments).

Third, if you’re an Xbox One fan, don’t assume this will give the XBO superiority. By the time games come out that use this, you can be assured that Sony will have an answer.

Rapid adoption

There is no doubt in my mind that support for Mantle/DirectX12/xxxx will be rapid because the benefits are both obvious and easy to explain, even to non-technical people. Giving a presentation on the power of Oxide’s new Nitrous 3D engine is easy thanks to the demos but it’s even easier because it’s obvious why it’s so much more capable than anything out there.

If I am making a game that needs thousands of movie-level CGI elements on today’s hardware, I need to be able to walk a non-technical person through what Nitrous is doing differently. The first game to use it should be announced before GDC and in theory, will be the very first native DirectX 12 and Mantle and xxxx game (i.e. written from scratch for those platforms).

A new way of looking at things: Don’t read this because what is read can’t be unread

DirectX 12/etc. will ruin older movies and game effects a little bit. It has for me. Let me give you a straightforward example:

Last warning:

Seriously.

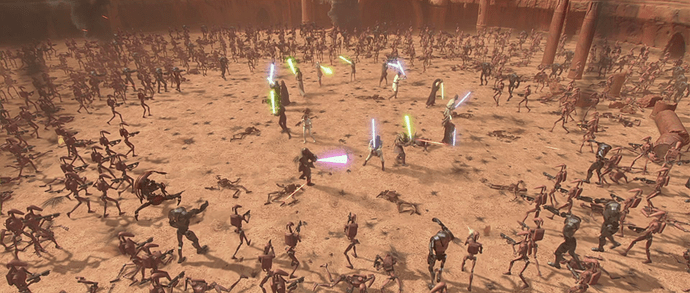

Okay. One of the most obvious limitations games have due to the 1 core to 1 core interaction are light sources. Creating a light source is “expensive” but easily done on today’s hardware. Creating dozens of light sources simultaneously on screen at once is basically not doable unless you have Mantle or DirectX 12. Guess how many light sources most engines support right now? 20? 10? Try 4. Four. Which is fine for a relatively static scene. But it obviously means we’re a long long way from having true “photo realism”.

So your game might have lots of lasers and explosions and such, but only (at most) a few of them are actually real light sources (and 3 of them are typically reserved for lighting the scene).
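Here is a rough sketch of what that budget looks like in practice. Everything in it is hypothetical: the `split_lights` function, the brightness numbers, and the rule of ranking effects by brightness are assumptions for illustration, not how any particular engine decides. The 4-light limit and the 3 reserved slots are the figures from above.

```python
MAX_REAL_LIGHTS = 4     # the limit described above
RESERVED_FOR_SCENE = 3  # slots typically taken by general scene lighting


def split_lights(effects, max_real=MAX_REAL_LIGHTS, reserved=RESERVED_FOR_SCENE):
    """Return (real, fake): which effects get a real light source and which do not.

    effects is a list of (name, brightness) pairs. Ranking by brightness is an
    assumed rule for this sketch.
    """
    free_slots = max(max_real - reserved, 0)
    ranked = sorted(effects, key=lambda effect: effect[1], reverse=True)
    return ranked[:free_slots], ranked[free_slots:]


if __name__ == "__main__":
    effects = [("laser", 5), ("explosion", 9), ("blaster shot", 2)]
    real, fake = split_lights(effects)
    print("real lights:", [name for name, _ in real])
    print("fake lights:", [name for name, _ in fake])
```

With these made-up numbers only the explosion gets a real light. The laser and the blaster shot are drawn but light nothing.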

As my son likes to say: You may not know that the lights are fake but your brain knows.

You’ll never watch this battle the same again.

Or this. Wow, those must be magical explosions, they don’t cast shadows…Or maybe it’s a CGI scene…

And once you realize that, you’ll never look at an older CGI movie or a game the same because you’ll see blaster shots and little explosions in a scene and realize they’re not causing shadows or lighting anything in the scene. You subconsciously knew the scene was “fake”. You knew it was filled with CGI but you may not have been able to explain why. Force lightning or a wizard spell that isn’t casting light or shadows on the scene may not be consciously noticeable but believe me, you’re aware of it (modern CGI fixes this btw but our games are still stuck at a handful).

Why I’ve been covering this

Before DirectX 12, I had never really talked about graphics APIs. That’s because I found them depressing. My claim to fame (code-wise) is multithreaded AI programming. I wrote the first commercial multithreaded game back in the 90s and I’ve been a big advocate of multithreading since. GalCiv for Windows was the first game to make use of Intel hyperthreading.

Stardock’s games are traditionally famous for good AI. It’s certainly not because I’m a great programmer. It’s because I have always tossed everything from path finding to AI strategy onto threads. The turn time in say Sorcerer King with > 1000 units running around is typically less than 2 seconds. And those are monsters fighting battles with magical spells and lots of pathfinding. That’s all because I can throw all this work onto multiple threads that are now on multiple cores. In essence, I’m cheating. So next time you’re playing a strategy game where you’re waiting 2 minutes between turns, you know why.
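The general shape of that approach, as a minimal Python sketch. This is not Stardock's code: `plan_move`, the grid coordinates, and the Manhattan-distance "pathfinding" are invented for illustration, and Python threads share one interpreter lock, so this shows the structure rather than the speed.

```python
from concurrent.futures import ThreadPoolExecutor


def plan_move(unit):
    """Hypothetical per-unit AI job: a unit's distance to its target on a grid."""
    name, (x, y), (tx, ty) = unit
    return name, abs(tx - x) + abs(ty - y)  # Manhattan distance as a stand-in


def run_turn(units, workers=4):
    # Hand every unit's job to a pool of worker threads instead of looping
    # over the units one by one on the main thread.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return dict(pool.map(plan_move, units))


if __name__ == "__main__":
    units = [("troll", (0, 0), (3, 4)), ("wizard", (2, 2), (2, 5))]
    print(run_turn(units))
```

Each unit's job is independent of the others, which is what makes it easy to spread across cores.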

But the graphics side? Depressing.

That magical spell is having no effect on the lighting or shadows. You may not notice it consciously but your brain does (DirectX 9). A DirectX 10/11 game would be able to give that spell a point light but as you can see, it’s a stream of light which is a different animal.

You don’t need an expert

Assuming you’re remotely technical, the changes from DirectX 11 to DirectX 12/Mantle are obvious enough that you should be able to imagine the benefits. If before only 1 core could send jobs to your GPU but now you could have all your cores send jobs at the same time, you can imagine what kinds of things can become possible. Your theoretical improvement in performance is (N-1) × 100% where N is how many cores you have. That’s not what you’ll really get. No one writes perfectly parallelized code and no GPU is at 0% saturation. But you get the idea.
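The arithmetic is easy to play with. The first function below is just the (N-1) × 100% figure. The second is an addition that is not from this article: it uses Amdahl's law, a standard rule of thumb for imperfectly parallel code, and the 80% parallel fraction in the demo is an arbitrary assumption, not a measurement.

```python
def ideal_gain_percent(cores):
    """The (N - 1) x 100% figure from the text."""
    return (cores - 1) * 100


def amdahl_gain_percent(cores, parallel_fraction):
    """Gain when only part of the work runs in parallel (Amdahl's law)."""
    speedup = 1.0 / ((1.0 - parallel_fraction) + parallel_fraction / cores)
    return (speedup - 1.0) * 100.0


if __name__ == "__main__":
    for cores in (2, 4, 8):
        print(cores, "cores:", ideal_gain_percent(cores), "% ideal,",
              round(amdahl_gain_percent(cores, 0.8)), "% at 80% parallel")
```

With 4 cores the ideal figure is 300%, but if only 80% of the work can be spread out, the gain drops to roughly 150%.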

GDC

Pay very very close attention to GDC this year. Even if you’re an OpenGL fan. Nvidia, AMD, Microsoft, Intel and Sony have a unified goal. Something is about to happen. Something wonderful.

DirectX 11 vs. DirectX 12 oversimplified